Deep Learning-based 6-DoF Grasp Estimation for Industrial Bin-Picking

Keywords: grasp planning, deep learning, bin-picking, 3D simulation, PyBullet, NxLib, API integration, socket programming, INtime RTOS, Python, C++

Overview

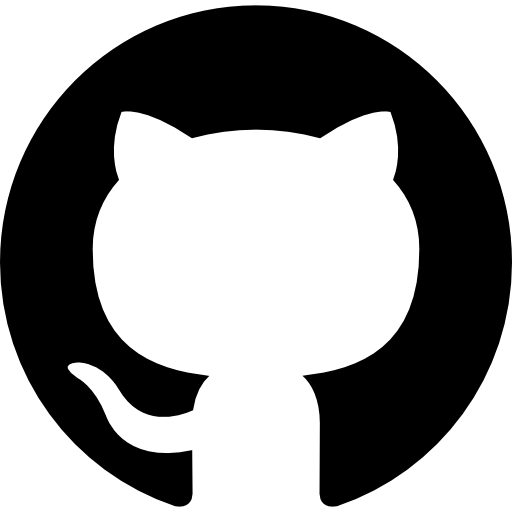

This project focuses on 6-DoF parallel-jaw grasping for bin-picking in an industrial setting. We explore a method that estimates 6-DoF grasps from bin-picking scenes using a convolutional neural network (CNN), without having to estimate the 6D pose of the target object. The idea behind it is that the robot does not need to know the precise 6D pose of the target object to obtain a reasonably good grasp: a rough estimate of how to approach the object is often sufficient. We therefore use what we call the grasp approaching pose vector, which determines the direction in Cartesian space from which the robot gripper should approach the target object. The network scores grasp candidates, represented as grasp rectangles taken from a single depth image, and at the same time outputs the 2D projection of the grasp approaching pose vector. This 2D projection can later be converted back to a 3D vector using the camera intrinsic matrix. For more details, see Deep Learning-based 6-DoF Grasp Estimation for Industrial Bin-Picking (manuscript in preparation).
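The overview says only that the 2D projection is converted back to 3D with the camera intrinsic matrix; it does not spell out the parameterization. One geometrically consistent reading, assumed here rather than taken from the manuscript, is that the network's 2D output (u, v) is the vanishing point of the approach direction d, i.e. (u, v, 1)ᵀ ∝ K d. Under that assumption, d is recovered up to scale as K⁻¹(u, v, 1)ᵀ and then normalized. The function name `backproject_direction` and the intrinsic values are hypothetical.

```python
import numpy as np


def backproject_direction(K, uv):
    """Recover a unit 3D approach direction from its 2D projection.

    Assumption (not from the original project): ``uv`` is the vanishing
    point of the approach direction d, so that [u, v, 1]^T ~ K @ d.
    """
    K = np.asarray(K, dtype=float)
    # Homogeneous image point of the projected direction.
    p = np.array([uv[0], uv[1], 1.0])
    # Undo the intrinsics: K^-1 p is parallel to d (solve avoids an explicit inverse).
    ray = np.linalg.solve(K, p)
    # K is upper triangular with K[2, 2] = 1, so ray[2] = 1 > 0:
    # the direction points away from the camera, into the scene.
    return ray / np.linalg.norm(ray)


# Hypothetical intrinsics for a 1280 x 1024 sensor (fx = fy = 1000 px,
# principal point at the image centre); real values come from calibration.
K = np.array([[1000.0, 0.0, 640.0],
              [0.0, 1000.0, 512.0],
              [0.0, 0.0, 1.0]])

# A projection at the principal point maps to the optical axis.
print(backproject_direction(K, (640.0, 512.0)))
```

A limitation of this reading is that directions parallel to the image plane have no finite vanishing point, so they cannot be represented by a pixel coordinate.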

Network Architecture

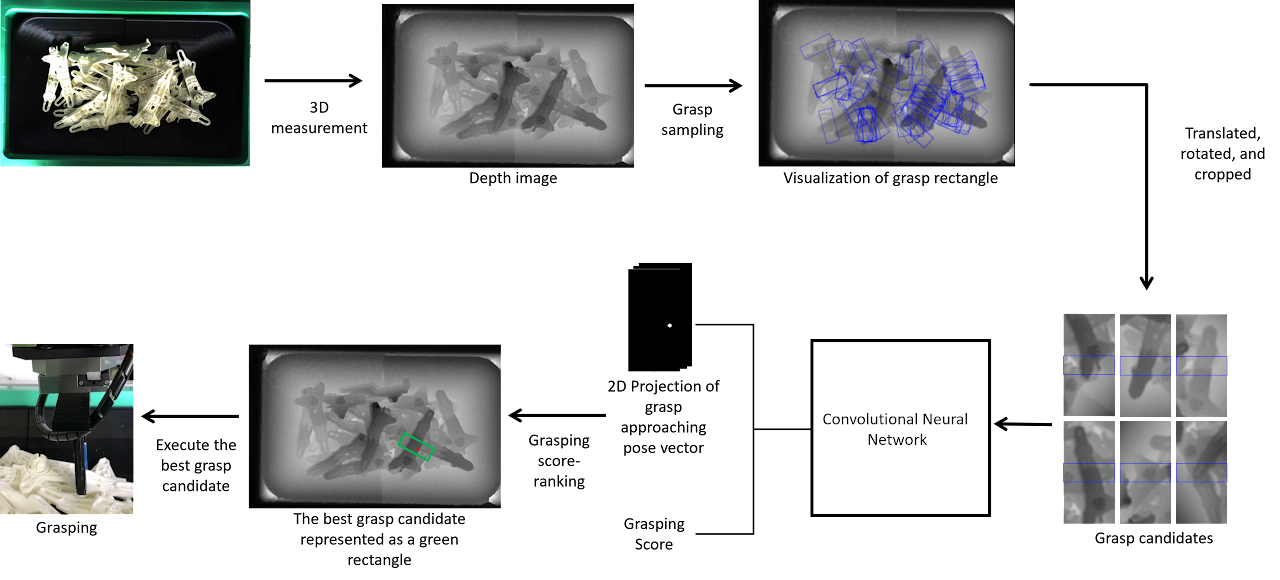

The proposed network architecture is shown in the figure above. The network takes an individual grasp image as input and outputs a grasp score and the 2D projection of the grasp approaching pose vector. It is divided into three parts: a feature extractor, a grasp pose estimator, and a grasp quality estimator. The feature extractor consists of four convolution stages, each composed of two residual blocks. The grasp pose estimator consists of three deconvolution stages, each also composed of two residual blocks.
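The text fixes the stage counts (four convolution stages and three deconvolution stages, two residual blocks each) but not the strides, channel widths, or exact position of the U-Net-style skip concatenation. The pure-Python sketch below only traces feature-map shapes through that layout under hypothetical choices: each convolution stage halves H and W and doubles the channels starting from 32, and each deconvolution stage upsamples by 2, concatenates the encoder output of the same size, and projects back to that output's width. It is bookkeeping, not the authors' model; `trace_shapes` and `BASE_CHANNELS` are illustrative names.

```python
# Hypothetical shape bookkeeping for the encoder-decoder layout.
N_ENC_STAGES = 4      # convolution stages in the feature extractor (from the text)
N_DEC_STAGES = 3      # deconvolution stages in the grasp pose estimator (from the text)
BLOCKS_PER_STAGE = 2  # residual blocks per stage (from the text)
BASE_CHANNELS = 32    # hypothetical channel width of the first convolution stage


def trace_shapes(height, width, in_channels=1):
    """Return (stage, channels_in, channels_out, H, W) for every stage.

    Assumptions (not from the original text): each convolution stage halves
    H and W and doubles the channels; each deconvolution stage doubles H and W
    while halving the channels, concatenates the encoder output of the same
    size, and its residual blocks project back to that encoder output's
    channel count. Residual blocks are assumed not to change H or W.
    """
    shapes = []
    skips = []
    c, h, w = in_channels, height, width
    for i in range(N_ENC_STAGES):
        c_out = BASE_CHANNELS * 2 ** i
        h, w = h // 2, w // 2
        shapes.append((f"conv{i + 1}", c, c_out, h, w))
        skips.append((c_out, h, w))
        c = c_out
    # Only the first three encoder outputs are forwarded as skip connections.
    skips = skips[:N_DEC_STAGES]
    for j in range(N_DEC_STAGES):
        h, w = h * 2, w * 2                   # upsampling by the deconvolution
        skip_c, skip_h, skip_w = skips.pop()  # deepest remaining skip first
        if (skip_h, skip_w) != (h, w):
            raise ValueError("skip size mismatch; use H and W divisible by 2 ** N_ENC_STAGES")
        concat_c = c // 2 + skip_c            # upsampled features + encoder features
        shapes.append((f"deconv{j + 1}", concat_c, skip_c, h, w))
        c = skip_c
    return shapes


for row in trace_shapes(96, 96):
    print(row)
print("residual blocks:", (N_ENC_STAGES + N_DEC_STAGES) * BLOCKS_PER_STAGE)
```

Under these assumptions a 96 × 96 grasp image ends as a 32-channel 48 × 48 map in the pose estimator, while a size that does not halve cleanly (for example 100 × 100) breaks the skip concatenation, so a real implementation would need padding, cropping, or a different stride schedule.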

Inspired by the U-Net architecture, the outputs of the first three convolution stages of the feature extractor are forwarded and concatenated to the inputs of the corresponding deconvolution stages with the same spatial size, as shown in the figure above. The last part, the grasp quality estimator, consists of two dense layers with 512 and 256 nodes, respectively. Instead of max-pooling with a fixed window size, we apply global max-pooling to produce the input of the first dense layer in the grasp quality estimator, so that the network can accept input images of arbitrary size.
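The NumPy sketch below illustrates why global max-pooling makes the quality estimator independent of input size: taking the maximum over all spatial positions of each channel turns any C × H × W feature map into a length-C vector. The 512- and 256-node dense layers follow the text; the 256 input channels, ReLU activations, random (untrained) weights, and single sigmoid output for the grasp score are assumptions for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

C = 256  # hypothetical number of feature-extractor output channels


def init_dense(n_in, n_out):
    # Hypothetical He-style random initialization; a trained model would load weights.
    return rng.normal(0.0, np.sqrt(2.0 / n_in), size=(n_in, n_out)), np.zeros(n_out)


W1, b1 = init_dense(C, 512)    # first dense layer: 512 nodes (from the text)
W2, b2 = init_dense(512, 256)  # second dense layer: 256 nodes (from the text)
W3, b3 = init_dense(256, 1)    # assumed single-node output for the grasp score


def relu(x):
    return np.maximum(x, 0.0)


def grasp_quality(features):
    """features: array of shape (C, H, W) for one grasp image; H and W are arbitrary."""
    pooled = features.max(axis=(1, 2))   # global max-pooling -> shape (C,)
    h = relu(pooled @ W1 + b1)
    h = relu(h @ W2 + b2)
    logit = h @ W3 + b3
    return 1.0 / (1.0 + np.exp(-logit))  # assumed sigmoid -> score in (0, 1)


small = rng.normal(size=(C, 6, 6))
large = rng.normal(size=(C, 11, 17))
print(grasp_quality(small).shape, grasp_quality(large).shape)  # (1,) (1,)
```

A fixed-window max-pooling layer followed by flattening would instead produce a vector whose length depends on H and W, which the first dense layer could not accept.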

Experiments with a Real Robot

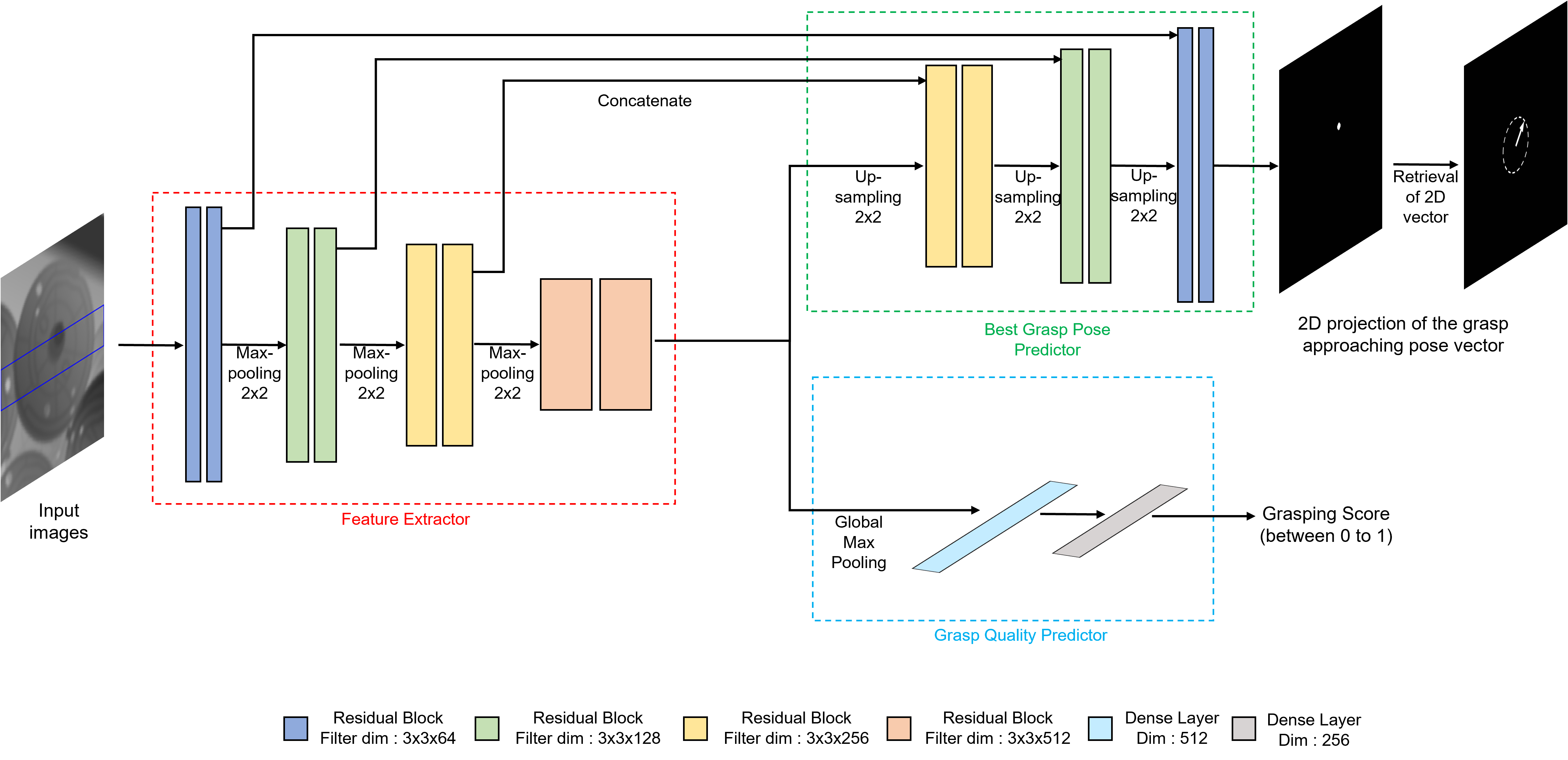

We evaluated the proposed method through real bin-picking experiments. The experimental setup is shown in the figure above. We used a 6-DoF Denso robotic arm as the manipulator, a pneumatic parallel gripper, and an Ensenso N-30 depth camera with a resolution of 1280 × 1024 as the 3D sensor.

We validated our method on four types of industrial objects. The proposed method performed reasonably well in all experiments, with grasp success rates ranging from 84.21% to 89.62%. The experiment video can be seen below.